Artificial intelligence (AI) is rapidly reshaping how people learn, teach, and create. In education, this change has brought both excitement and uncertainty. Many educators are asking what acceptable use of AI really means. Concerns range from its potential to disrupt learning to the risk of undermining academic integrity in student work.

Over the past year, the research team at ICEP Europe has contributed to the Erasmus+-funded AI Reshapes EDucation (AIRED) project. The project brings together partners from France (HAIKARA and ICN) and Spain (AEG). Its goal is to support educators by offering practical guidance, essential skills, and a clear ethical code for the responsible, legal, and inclusive use of AI in classrooms.

Key Findings from Our Research

ICEP Europe led Work Package 4 of the AIRED project. This stage focused on three main aims:

(a) analysing the risks of using AI in education;

(b) creating a framework for inclusive AI design and implementation; and

(c) defining best practices that promote ethical and inclusive use.

Through desk research, interviews, and an online survey with educators, several important risks were identified.

Certain AI tools, such as ChatGPT, can undermine academic integrity and make plagiarism easier.

Both teachers and students may become overly dependent on AI, which could affect educators’ job security.

Privacy and data protection are at risk, especially when student data is entered into AI tools.

AI models may frequently produce inaccurate outputs, passing incorrect information on to users.

Using AI-generated content in academic work raises copyright and intellectual property issues.

Many educators lack the expertise and confidence required to implement AI in their teaching practice.

For a detailed discussion of these risks, see D4.1. Mapping AI Risks in Education and Training.

To explore solutions and best practices, review D4.2. Defining Best Practices for Ethical AI Use: Guidelines for Mitigation.

How Educators Are Using AI

Despite these concerns, many educators already use AI. Of the 39 teachers surveyed across Ireland, approximately 23.1% said they used AI daily in their work, while 30.7% said they used it weekly. Additionally, 87.1% expressed a moderate to strong interest in using AI in their teaching, training, or management activities going forward.

These figures show how quickly AI is becoming part of Irish education, with teachers taking it up alongside students. However, its use remains uneven and largely unregulated.
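Because the sample is small, it can help to read the percentages above as approximate head counts. The Python sketch below back-calculates them, assuming each percentage applies to all 39 respondents. The names `approximate_count` and `estimate_counts` are hypothetical helpers introduced for illustration, and the resulting counts are estimates, not figures reported by the survey.

```python
# Hypothetical helpers: back-calculate approximate head counts from the
# percentages reported for the 39 surveyed teachers. The counts themselves
# are not stated in the text, so these are estimates only.

SAMPLE_SIZE = 39  # teachers surveyed across Ireland (from the text)

REPORTED_PERCENTAGES = {
    "daily use": 23.1,
    "weekly use": 30.7,
    "moderate to strong interest": 87.1,
}


def approximate_count(percentage: float, sample_size: int) -> int:
    """Return the nearest whole number of respondents for a percentage."""
    return round(percentage / 100 * sample_size)


def estimate_counts(percentages: dict, sample_size: int) -> dict:
    """Map each reported percentage to its estimated respondent count."""
    return {
        label: approximate_count(value, sample_size)
        for label, value in percentages.items()
    }


if __name__ == "__main__":
    for label, count in estimate_counts(REPORTED_PERCENTAGES, SAMPLE_SIZE).items():
        print(f"{label}: about {count} of {SAMPLE_SIZE} teachers")
```

Under these assumptions, the reported figures correspond to roughly 9, 12, and 34 teachers respectively. With 39 respondents, each teacher accounts for about 2.6 percentage points, so small differences between figures should be read with caution.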

Educators also described barriers that limit effective AI adoption:

A lack of clear, up-to-date guidelines for choosing and using AI in teaching and assessment.

A lack of affordable and accessible training designed for busy teachers.

Technical problems such as generic outputs, uneven quality, and poor translation into minority languages like Irish.

Limited access to inclusive or assistive AI systems, often because of high costs or restrictive design.

Further details are available in D4.4. Guidelines on the Ethical and Inclusive Use of AI in Education and Training.

Implementing Inclusive and Ethical AI Practices

ICEP Europe’s research also examines AI’s positive potential. When used well, AI can streamline teachers’ work, expand learning opportunities, and build digital literacy for all learners. At the same time, we have taken care to design guidelines that mitigate the risks associated with the misuse of AI.

This objective is explored in D4.3. A Framework for Inclusive Design.

Through analysis of educator challenges and collaboration with special education experts, the team developed a framework to help both AI developers and educators design and select tools that are ethical, inclusive, and pedagogically sound.

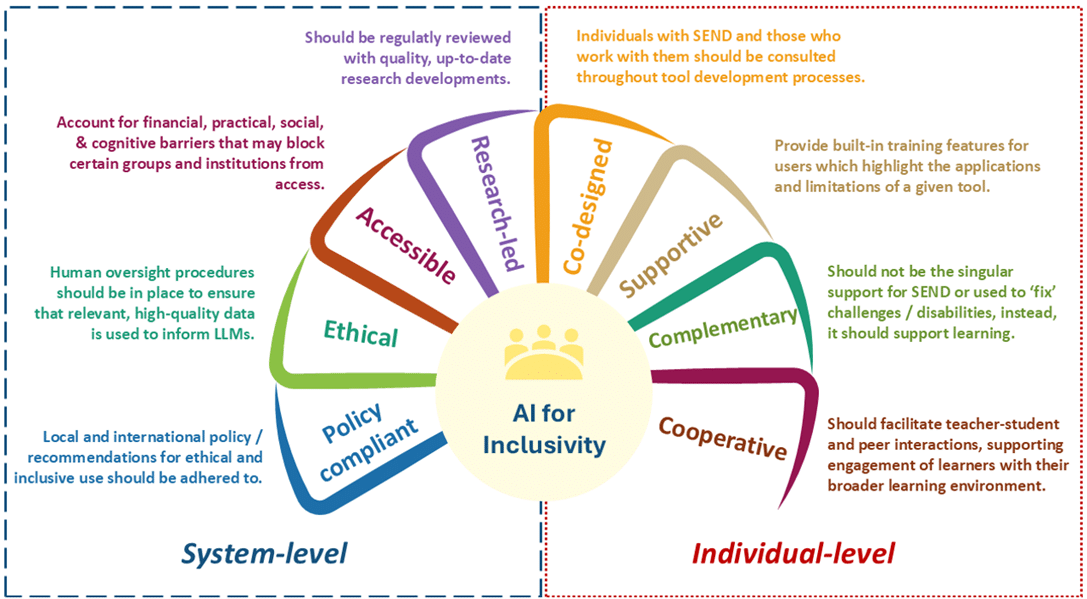

System-Level and Individual-Level Principles

The framework highlights eight key areas for inclusive AI design and use.

At the system level, it covers principles for policymakers and institutions, such as regulations, internal policies, and broader design standards.

At the individual level, it offers guidance for AI designers and teachers to ensure that their tools support inclusion and ethical practice.

Together, these two levels form a holistic view of how AI should be applied in education. The approach encourages collaboration among educators, policymakers, administrators, parents, and all other stakeholders involved in the learning process.

AI as a Tool for Support, Not Replacement

At its core, the framework rests on one key principle: AI tools should support students’ unique needs, helping them engage meaningfully with learning and get the most out of their education. Such tools should not be designed with the express purpose of ‘erasing’ students’ needs or disabilities; rather, they should facilitate the support students receive for their unique needs and experiences. By consciously applying these principles to pedagogical AI tools, designers and educators can enhance, rather than replace, the organic, human-centred classroom experience.

Acknowledgement

This research was carried out as part of the AI Reshapes EDucation (AIRED) project and funded under Erasmus+ Key Action 2.